(NewsNation) — Artificial intelligence tools that can be used to generate AI nudity are easy to find online and sometimes even boosted by accounts on social media sites like YouTube and TikTok.

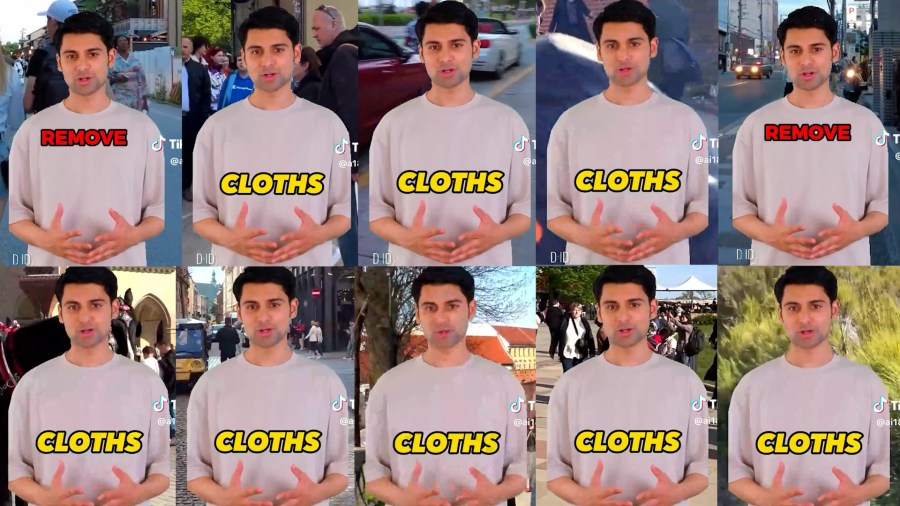

A TikTok search by NewsNation revealed dozens of videos pushing websites that allow users to “remove clothes from any picture,” including those of their “crush.”

“Do you have any picture of your crush?” a man, who appears to be AI-generated, said in a TikTok dated Sept. 18. “I’ve discovered an incredible AI website that I’m sure you’ll find interesting because no girl wants you to know about this tool. You can remove the clothes from any picture.”

NewsNation reviewed other similar videos that have been posted on TikTok in recent months, all of which appear to be AI-generated. Each follows a nearly identical script with the same robotic cadence.

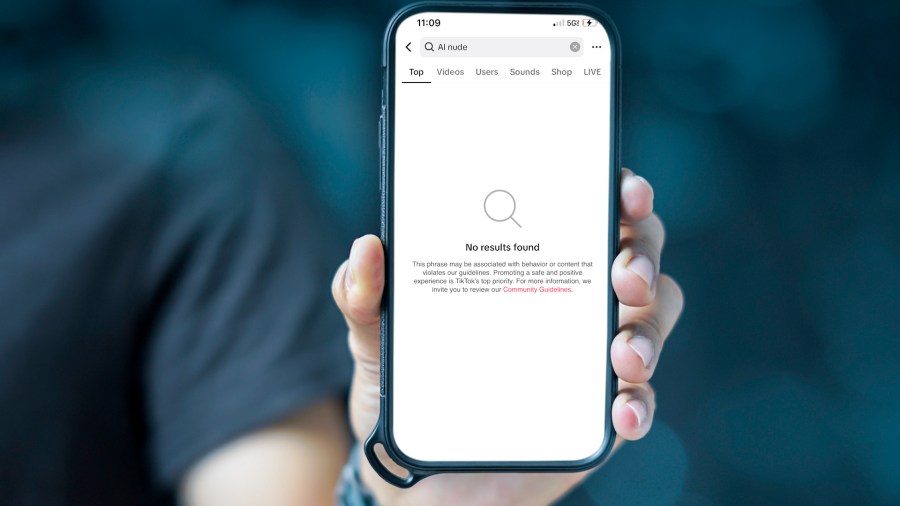

Last week, a TikTok search for AI nudity delivered several results, but a day later the platform had blocked the search. Instead, a message read: “This phrase may be associated with behavior or content that violates our guidelines.”

Related search terms, some of which were listed on the app’s “others searched for” sidebar, resurfaced the posts.

Earlier this month, a nude photo controversy in New Jersey amplified concerns about cyberbullying and artificial intelligence.

Students at Westfield High School told the Wall Street Journal that one or more classmates used an online tool to generate AI pornographic pictures of female classmates and then shared them in group chats.

“I am terrified by how this is going to surface and when. My daughter has a bright future and no one can guarantee this won’t impact her professionally, academically or socially,” one 14-year-old student’s mother, Dorota Mani, told the Journal.

With Westfield police still investigating, it’s unclear which AI tool the boys allegedly used, or how they learned about it, although experts say the internet is awash with tutorials and easy-to-use programs.

“There’s a whole ecosystem out there for this stuff,” said Adam Dodge, an attorney whose organization EndTAB is focused on ending technology-enabled abuse.

AI “nudifier” apps and other AI tools can take real images and create naked photos using deep learning algorithms. Those algorithms are typically trained on images of women and allow AI apps to overlay realistic images of naked body parts, even if the person in the source photo is clothed.

The tools are easy to come by, but NewsNation found they’re also being pushed by accounts on TikTok and YouTube, two of the most popular social media sites for U.S. teens, according to Gallup.

When reached by email, TikTok responded by removing the two clips flagged by NewsNation, citing its AI-generated content policy, which requires manipulated media to be clearly disclosed.

A spokesperson also highlighted the company’s sexual exploitation guidelines, which prohibit users from editing another person’s image to sexualize them.

Videos uploaded to TikTok are initially reviewed by automated technology and then moderators work alongside those systems to take into account additional context, per the company’s guidelines. Content and accounts that violate those rules are supposed to be taken down.

The company did not specifically explain how it intends to crack down on AI-generated content that directs users to AI nudifier tools, but noted the low number of views on the posts NewsNation highlighted.

Dozens of similar videos posted on TikTok from several accounts plugging AI clothing removal websites remained up as of Thursday. The number of views on those posts ranged from hundreds to several thousand.

On YouTube — where the average American teen spends roughly two hours a day — videos that show users how to generate “Not safe for work (NSFW) AI images” have amassed hundreds of thousands of views.

When NewsNation searched the site for AI content, the popular video and social media platform, which Google owns, had a sponsored post at the top of its page promoting an “AI clothes remover” promising the “best AI deep nudes.”

Google did not respond when asked whether the sponsored post or tutorial videos violated its guidelines, which ban “explicit content” that’s meant to be “sexually gratifying.”

Dodge thinks social media companies need to do more to protect users, especially kids.

“They have not acted quickly enough or with enough intent, in my opinion,” he said. “They can use their algorithm, they can use AI to identify and remove these videos in real-time if they wanted to.”

This week, YouTube unveiled new rules that will require video-makers to disclose when they’ve created altered or synthetic content that looks real. Like TikTok, Google relies on a combination of AI technology and real people to enforce its community guidelines.

The policy comes amid a rapidly changing AI ecosystem, where deciphering real from fake has never been more challenging.

Dodge founded EndTAB in 2019 to educate people about threats online. Since then, AI tools have evolved, and non-consensual deepfake pornography has grown even more pervasive.

“It’s everywhere, and if you’re looking for it, very easy to find,” Dodge said. “Even if you’re not looking for it, you’re likely to be exposed to it in one way, shape, or form.”

The number of deepfake videos online has exploded in recent years, roughly doubling every six months from 2018 to 2020, according to Sensity AI, a company that detects synthetic media. The vast majority of deepfakes, around 96%, are non-consensual pornography, Sensity AI has found.

Over the summer, an artist in Lansing, Michigan was shocked to receive a nude image of herself from a complete stranger.

“I posted a picture of myself fully clothed, and here is the same picture of me nude,” Mila Lynn told NewsNation affiliate WLNS.

As technology rapidly evolves, governments are still playing catch-up. In the U.S., there are no federal regulations on deepfakes, though recent proposals could soon change that. In the meantime, some states have acted on their own.

Earlier this year, Illinois lawmakers passed legislation to crack down on deepfakes. New York recently passed a similar ban. In Indiana, lawmakers are working on updating the state’s child pornography law to make sure it encompasses AI-generated images.

But even as additional guardrails are added, Dodge said it’s important for parents to teach their children about threats online.

“[Kids] know to wait for the light to turn green before they cross the street,” he said. “They know that because their parents have taught them how to do that…we just need to take that approach and apply it to their online lives.”